Description

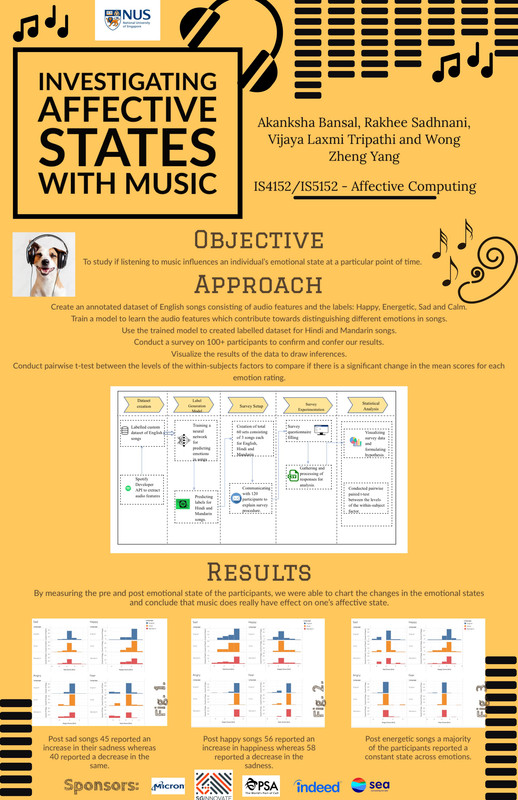

This project researches the affective content of music, which can be observed through multiple emotion recognition techniques, including expressions, facial images and physiological signals. Our study examines the effect that cross-cultural songs have on a group of individuals by having them take part in our experiment and then self-report their emotions. In our paper, we propose to investigate the effect music has on an individual's emotional state at a particular point in time.

We aim to uncover these relations through computational models. The first step is to construct a database of cross-cultural songs, namely English, Chinese and Hindi music excerpts. Next, as part of feature extraction, the audio clips will be labelled by emotion in addition to computing the standard acoustic features, using a mix of supervised machine learning techniques and deep learning models. The classified songs will then be played to participants in our experimental study to identify the changes they trigger in each participant's emotional state. After listening, participants will fill out a survey, and the aggregated responses will be used to build a predictive model of emotion change for any given person.
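As a rough illustration of the supervised classification step, the sketch below assigns an emotion label to an audio clip from its acoustic features using a nearest-centroid classifier. This is a deliberately simple stand-in for the machine learning and deep learning models mentioned above, not the project's actual method. The two features (a scaled tempo and an RMS energy value), the labels and all numeric values are hypothetical placeholders, not data from this study.

```python
from math import dist
from statistics import fmean

# Hypothetical toy training data: (scaled_tempo, rms_energy) -> emotion label.
# These values are illustrative only and are NOT measurements from the study.
TRAIN = [
    ((0.9, 0.8), "happy"),
    ((0.8, 0.7), "happy"),
    ((0.2, 0.2), "sad"),
    ((0.3, 0.1), "sad"),
]


def fit_centroids(samples):
    """Nearest-centroid training: average the feature vectors of each label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = fit_centroids(TRAIN)
print(predict(centroids, (0.85, 0.75)))  # → happy
print(predict(centroids, (0.25, 0.15)))  # → sad
```

In practice the feature vectors would come from an audio analysis step (for example spectral or rhythmic descriptors), and a nearest-centroid model would likely be replaced by a stronger classifier; it is used here only because it makes the train-then-predict structure easy to see.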